About 100 million users around the world use this app (GeForce Experience, GFE). GFE reports back telemetry about what users' environments look like, where they're from, what games they have, how long they play, etc., so NVIDIA has a lot of data from customers through GFE. GeForce business: using DL for product forecasting.

Detecting bad chip designs by feeding a model diagrams of chip designs.

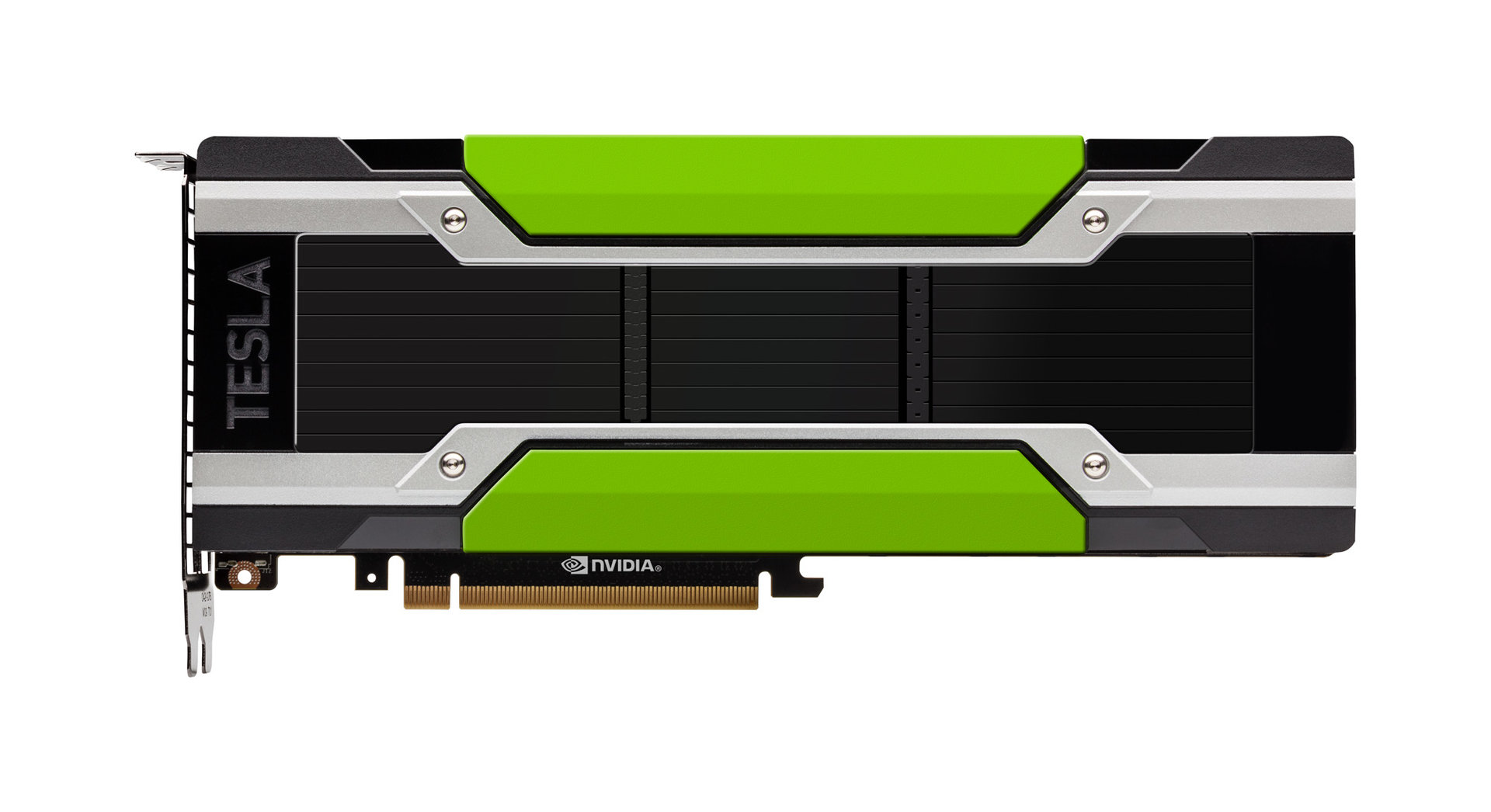

# TESLA P100 FP64 MANUAL #

They're trying to replace some of the manual work. For example, autonomous driving uses large video datasets, generating about a TB per day. NVIDIA: How We Are Using DGX SATURNV. DLAR and autonomous driving: multiple teams are using DL to train algorithms for self-driving cars.

# TESLA P100 FP64 SOFTWARE #

The power of 125 DGX-1 nodes, coupled with NVIDIA's deep learning software stack, enables AI-accelerated analytics, provides faster data processing, speeds time to insight, and allows large datasets to be visualized like never before. This is the first and only supercomputer to include 1,000 NVIDIA Pascal™-powered Tesla® P100 accelerators connected by NVIDIA NVLink™ technology, delivering groundbreaking performance at scale. Delivering 10 GFLOPS per watt, it is 7x more efficient than a Xeon Phi system, and it peaks at 5 petaflops for FP64 and 20 petaflops for deep learning (FP16) performance. NVIDIA DGX SATURNV holds the title of fastest AI supercomputer on the Top500 list and ranks #1 on the Green500 list. Not only is it the world's greenest supercomputer, it is also the world's fastest for deep learning training and AI development.

| Application | GPU Server | CPU Server | # CPU Servers to Match 1 GPU Server | $ Spent on CPU Servers | $ Saved with GPU Servers |
|---|---|---|---|---|---|
| LAMMPS | $32K | $8K | 11 | $88K | $56K (64% savings) |
| Caffe-AlexNet | $32K | $8K | 21 | $168K | $136K (80% savings) |

SATURNV Positioning: NVIDIA DGX SATURNV has the computing power of over 30,000 x86 servers for unparalleled deep learning and AI analytics performance.

Single P100 PCIe Server vs. Lots of Weak Servers:

- LAMMPS (molecular dynamics): a 4x Tesla P100 PCIe server is faster than 8 CPU servers.
- Caffe AlexNet (deep learning): a 4x Tesla P100 PCIe server is faster than 16 CPU servers.

[Charts: LAMMPS and Caffe AlexNet scaling data, speed-up vs. # of CPU servers, with the 4x P100 server as reference]

LAMMPS dataset: EAM. CPU: Dual E V4. GPU: P100 PCIe.

GeForce forecasting: the app looks at the GFE telemetry data, combines it with macroeconomic trends, and helps forecast sales by product.

Most Applications Don't Scale Perfectly
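The savings column in the "CPU servers to match 1 GPU server" table is plain arithmetic: the number of CPU servers needed, times $8K, minus the $32K GPU server. Below is a minimal Python sketch that uses only the prices and node counts from that table; the class and function names are hypothetical. It also computes the parallel efficiency the node counts would imply. That part rests on an assumption not stated on the slide: the 11 and 21 CPU nodes are taken to be matching the 10x and 16x GPU speed-ups quoted on the "How Tesla Saves Money" slide. Under that reading, an efficiency below 1.0 is one way to interpret "Most Applications Don't Scale Perfectly".

```python
from dataclasses import dataclass


@dataclass
class MatchScenario:
    """One row of the 'CPU servers to match 1 GPU server' table (prices in $K)."""
    app: str
    gpu_server_cost: float   # 4x P100 server, $K
    cpu_server_cost: float   # dual-socket CPU server, $K
    cpu_servers_needed: int  # CPU servers needed to match one GPU server

    def cpu_spend(self) -> float:
        """Total spend on the CPU servers needed to match one GPU server."""
        return self.cpu_servers_needed * self.cpu_server_cost

    def savings(self) -> float:
        """Dollars saved by buying the single GPU server instead."""
        return self.cpu_spend() - self.gpu_server_cost

    def savings_pct(self) -> float:
        """Savings as a fraction of the CPU spend."""
        return self.savings() / self.cpu_spend()


def scaling_efficiency(gpu_speedup: float, cpu_servers_needed: int) -> float:
    """Parallel efficiency implied if N CPU servers together match a given speed-up.

    Perfect linear scaling (N servers = N x one server) gives 1.0.
    """
    return gpu_speedup / cpu_servers_needed


rows = [
    MatchScenario("LAMMPS", 32, 8, 11),
    MatchScenario("Caffe-AlexNet", 32, 8, 21),
]

for r in rows:
    print(f"{r.app}: spend ${r.cpu_spend():.0f}K, save ${r.savings():.0f}K "
          f"({r.savings_pct():.1%})")

# Assumption: the 11 / 21 CPU nodes are matching the 10x / 16x GPU speed-ups.
print(f"LAMMPS implied efficiency: {scaling_efficiency(10, 11):.2f}")
print(f"Caffe-AlexNet implied efficiency: {scaling_efficiency(16, 21):.2f}")
```

Computed exactly, the Caffe-AlexNet savings come to about 80.95% of the CPU spend, which the slide shows as 80%.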

How Tesla Saves Money:

| Application | GPU Server: 4x P100 (PCIe) | CPU Server (Dual Socket) | GPU Server Speed-up | Simple Cost of CPU Servers | $ Saved with GPU Servers |
|---|---|---|---|---|---|
| LAMMPS | $32K | $8K | 10x | $80K (10 servers) | $48K (60% savings) |
| Caffe-AlexNet | $32K | $8K | 16x | $112K (16 servers) | $80K (71% savings) |

Presentation on theme: "Tesla P100 Performance Guide"

Customer reference point is the server node:

| Server They've Known for 20 Years | Cost |
|---|---|
| 2x CPU | $4K |
| Memory | $1K |
| Interconnect NIC | $1K |
| Rack level/misc. costs | $2K |
| Server cost | $8K |

| Server with GPUs | Cost |
|---|---|
| Server | $8K |
| 4x GPU | $24K |
| GPU server cost | $32K |
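The "simple" cost column assumes perfect linear scaling: an Nx GPU speed-up is matched by exactly N CPU servers. The sketch below rebuilds the $8K and $32K server prices from the component costs on the "Customer reference point" slide and applies that simple model. It is a minimal illustration under those assumptions; the dictionary and function names are hypothetical.

```python
# Prices in $K, taken from the "Customer reference point" slide.
SERVER_COMPONENTS_K = {
    "2x CPU": 4,
    "Memory": 1,
    "Interconnect NIC": 1,
    "Rack level/misc": 2,
}
FOUR_GPUS_K = 24  # 4x Tesla P100 PCIe


def cpu_server_cost_k() -> int:
    """Cost of one dual-socket CPU server: the sum of its components."""
    return sum(SERVER_COMPONENTS_K.values())


def gpu_server_cost_k() -> int:
    """The same server plus four GPUs."""
    return cpu_server_cost_k() + FOUR_GPUS_K


def simple_savings_k(gpu_speedup: int) -> tuple[int, int, float]:
    """'Simple' model: assumes perfect linear scaling, so an Nx speed-up is
    matched by exactly N CPU servers.

    Returns (CPU spend in $K, $K saved, fraction saved).
    """
    cpu_spend = gpu_speedup * cpu_server_cost_k()
    saved = cpu_spend - gpu_server_cost_k()
    return cpu_spend, saved, saved / cpu_spend


print(cpu_server_cost_k(), gpu_server_cost_k())  # 8 32
print(simple_savings_k(10))                       # (80, 48, 0.6)
```

Applied to the Caffe-AlexNet row, `simple_savings_k(16)` gives a CPU spend of $128K (16 x $8K). The slide lists $112K for 16 servers, and its 71% savings figure is consistent with $112K, which would correspond to 14 servers at $8K each.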
